Understanding the Embedding Limits in RAG Systems

Recent research from Google DeepMind points to a significant hurdle for Retrieval-Augmented Generation (RAG) systems: a fundamental limitation tied to embedding size. These systems predominantly use dense embeddings to map both queries and documents into a shared, fixed-dimensional vector space. The research shows that this design carries an inherent constraint that neither scaling up models nor improving training can remove.

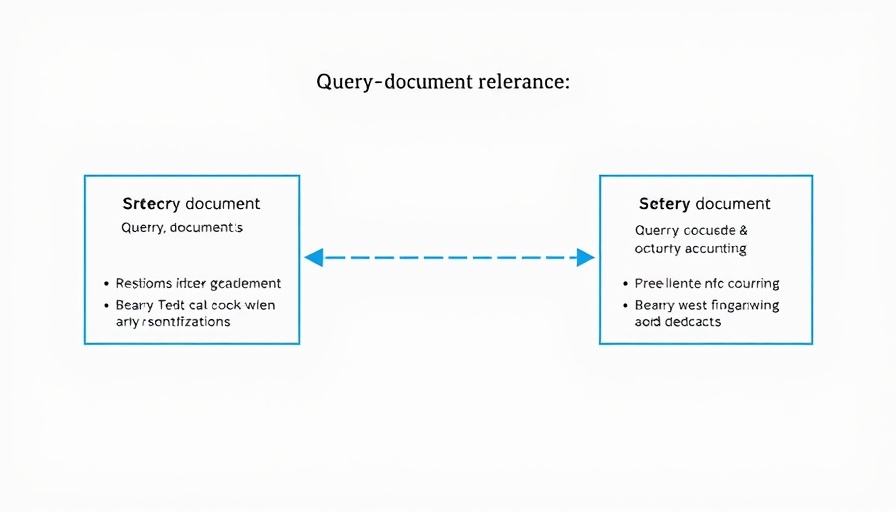

The Problem with Fixed-Dimensional Embeddings

At the core of this issue lies the representational capacity of fixed-size embeddings. Simply put, an embedding of dimension 'd' can represent only a limited number of distinct combinations of relevant documents. For example, the research indicates that 512-dimensional embeddings struggle to handle more than about 500,000 documents. Beyond that point retrieval accuracy breaks down, which is a serious problem for businesses that depend on reliable search over their data.

Larger embeddings raise the limit but do not remove it: at 1024 dimensions the breakdown point is around 4 million documents, and at 4096 dimensions it is around 250 million. These are best-case estimates. In real-world applications, where embeddings must be produced from natural-language text rather than optimized freely, systems generally falter much earlier.
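The idea that a small dimension can realize only some of the possible top-k result sets can be seen in a toy setting. The sketch below is an illustration only, not the method used in the research: the document layouts, the query sweeps, and the helper names `top_k_set` and `reachable_sets` are all assumptions made for this example. It counts how many distinct top-2 sets dot-product retrieval can return for 8 documents in 1 and 2 dimensions, and compares that with the number of possible pairs.

```python
import math


def top_k_set(query, docs, k):
    """Indices of the k documents with the highest dot product to the query."""
    scores = [sum(q * x for q, x in zip(query, doc)) for doc in docs]
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return frozenset(ranked[:k])


def reachable_sets(queries, docs, k):
    """All distinct top-k sets produced by the given queries."""
    return {top_k_set(q, docs, k) for q in queries}


n, k = 8, 2
total = math.comb(n, k)  # number of possible document pairs

# d = 1: documents are points on a line; queries are positive or negative scalars.
docs_1d = [(float(i + 1),) for i in range(n)]
queries_1d = [(sign * m / 10,) for sign in (-1, 1) for m in range(1, 51)]
sets_1d = reachable_sets(queries_1d, docs_1d, k)

# d = 2: documents equally spaced on the unit circle (a hypothetical layout);
# queries sweep the full circle in 1-degree steps.
docs_2d = [
    (math.cos(2 * math.pi * i / n), math.sin(2 * math.pi * i / n))
    for i in range(n)
]
queries_2d = [
    (math.cos(math.radians(a)), math.sin(math.radians(a))) for a in range(360)
]
sets_2d = reachable_sets(queries_2d, docs_2d, k)

print(f"possible pairs: {total}")
print(f"reachable in 1-D: {len(sets_1d)}")
print(f"reachable in 2-D (this layout): {len(sets_2d)}")
```

In one dimension a query can only prefer the two largest or the two smallest documents, so most pairs can never be returned together. The 2-D count applies to this particular layout and is not a proof about every possible arrangement, but it shows the same pattern: the number of possible combinations grows much faster than what a fixed dimension can express.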

Introducing the LIMIT Benchmark

To probe these limitations, the Google DeepMind team introduced the LIMIT benchmark, a dataset designed to test embedders under varying document loads. The benchmark comes in two configurations. 'LIMIT full' comprises 50,000 documents, and even top-tier embedders struggle on it, with recall@100 often falling below 20%. 'LIMIT small' contains only 46 documents, yet even at this size no model solves the task reliably.

The recall@2 figures on LIMIT small make this concrete: even the most sophisticated systems fall short. Promptriever Llama3 8B achieved only 54.3% recall@2, while GritLM 7B and E5-Mistral 7B recorded 38.4% and 29.5% respectively. These findings underscore that the constraint is not a matter of scale; it is the single-vector embedding architecture itself that limits effectiveness.
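For readers unfamiliar with the metric, recall@k is the fraction of a query's relevant documents that appear in the top k retrieved results, averaged over queries. The following is a minimal sketch of that calculation; the function names and the tiny example data are hypothetical and are not taken from the LIMIT benchmark.

```python
def recall_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the relevant documents found in the top k results."""
    relevant = set(relevant_ids)
    if not relevant:
        raise ValueError("a query needs at least one relevant document")
    found = relevant & set(ranked_ids[:k])
    return len(found) / len(relevant)


def mean_recall_at_k(runs, k):
    """Average recall@k over (ranked_ids, relevant_ids) pairs."""
    return sum(recall_at_k(ranked, rel, k) for ranked, rel in runs) / len(runs)


# Hypothetical retrieval results for three queries, two relevant docs each.
runs = [
    (["d3", "d7", "d1"], {"d3", "d7"}),  # both relevant docs in the top 2
    (["d2", "d9", "d4"], {"d4", "d9"}),  # one of two in the top 2
    (["d5", "d6", "d8"], {"d1", "d2"}),  # none in the top 2
]

print(mean_recall_at_k(runs, k=2))  # 0.5
```

A recall@2 of 54.3% therefore means that, on average, only slightly more than half of the relevant documents appeared in the top two positions.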

Why This Matters for Small and Medium Businesses

For small to medium enterprises (SMEs) implementing RAG systems, understanding these limits is crucial. Current RAG practice tends to assume that embeddings will keep working as the document collection grows. That assumption can lead to significant retrieval failures, which in turn affect how businesses operate and compete.

Instead of relying entirely on dense embeddings, businesses might consider integrating classical models such as BM25. Unlike their dense counterparts, these sparse models operate in effectively unbounded dimensional spaces, with roughly one dimension per vocabulary term, and so sidestep the capacity limit that fixed-size dense embeddings run into. Adding a sparse retriever can improve retrieval quality on the kinds of queries where dense embeddings break down.
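To make the contrast concrete, here is a minimal sketch of BM25 scoring in plain Python. It assumes whitespace tokenization, the commonly used parameters k1 = 1.5 and b = 0.75, and a non-negative IDF variant; the class name `BM25` and the sample documents are hypothetical. A production system would normally use an established search library instead.

```python
import math
from collections import Counter


def tokenize(text):
    """Very simple tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


class BM25:
    def __init__(self, documents, k1=1.5, b=0.75):
        self.k1, self.b = k1, b
        self.doc_tokens = [tokenize(d) for d in documents]
        self.doc_freqs = [Counter(tokens) for tokens in self.doc_tokens]
        self.avg_len = sum(len(t) for t in self.doc_tokens) / len(self.doc_tokens)
        # Document frequency: in how many documents each term occurs.
        df = Counter(term for tokens in self.doc_tokens for term in set(tokens))
        n = len(documents)
        self.idf = {
            term: math.log((n - count + 0.5) / (count + 0.5) + 1)
            for term, count in df.items()
        }

    def score(self, query, index):
        """BM25 score of the document at `index` for the query."""
        freqs = self.doc_freqs[index]
        length_norm = 1 - self.b + self.b * len(self.doc_tokens[index]) / self.avg_len
        total = 0.0
        for term in tokenize(query):
            tf = freqs.get(term, 0)
            if tf:
                total += self.idf[term] * tf * (self.k1 + 1) / (tf + self.k1 * length_norm)
        return total

    def rank(self, query):
        """Document indices ordered from best to worst match."""
        return sorted(
            range(len(self.doc_tokens)),
            key=lambda i: self.score(query, i),
            reverse=True,
        )


docs = [
    "Jon likes apples and quokkas",
    "Mia likes apples and chess",
    "Leo likes quokkas and chess",
]
bm25 = BM25(docs)
print(bm25.rank("quokkas apples"))  # document 0 matches both terms and ranks first
```

Each distinct term acts as its own dimension here, so a query naming two specific items can single out the one document that contains both, regardless of how many other combinations exist in the collection.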

Future Predictions: Innovations Ahead

As the landscape of artificial intelligence progresses, it is likely that new architectural paradigms will emerge. The need for adaptable and efficient retrieval systems will drive further research into innovative models that can navigate the limitations identified by Google DeepMind. SMEs that stay informed about these developments will be better positioned to leverage advancements in AI technology for growth and competitiveness.

Common Misconceptions about AI Embeddings

One prevalent misconception is that simply increasing the embedding size will resolve retrieval issues. Larger embeddings raise the representational ceiling, but they do not remove it: the limit is a property of the single-vector architecture and reappears at a larger document count. Understanding this dynamic is essential for businesses aiming to harness AI effectively.

Actionable Insights for SMEs Using AI

For small and medium businesses looking to maximize their AI tools, it is vital to:

- Investigate alternative models that might suit their data needs better than traditional dense embeddings.

- Stay updated on AI advancements and benchmark studies like LIMIT that elucidate potential pitfalls in current methodologies.

- Engage in continuous learning and training to adapt to new data models and methodologies that can enhance overall business efficiency.
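As one illustration of the first point, results from a dense retriever and a sparse retriever such as BM25 can be merged into a single ranking. The sketch below uses reciprocal rank fusion, a common fusion technique that the source article does not itself prescribe; the function name, the constant 60 (a frequently used default), and the document IDs are assumptions for this example.

```python
def reciprocal_rank_fusion(rankings, rank_constant=60):
    """Merge several ranked lists of document IDs into one ranking.

    Each document earns 1 / (rank_constant + rank) from every list it
    appears in, so documents ranked well by several retrievers rise.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (rank_constant + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical outputs of two retrievers for the same query.
dense_results = ["d2", "d1", "d4"]
sparse_results = ["d1", "d3", "d2"]

fused = reciprocal_rank_fusion([dense_results, sparse_results])
print(fused)  # ['d1', 'd2', 'd3', 'd4']
```

Fusion works only on rank positions, so it needs no score calibration between the two retrievers, which makes it a low-effort way to trial a hybrid setup.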

As we navigate the evolving landscape of AI, it’s important for businesses to remain proactive and adaptable. Embracing these changes can open doors to new growth opportunities and better operational practices.

Incorporating this knowledge into your decision-making processes is not just beneficial; it's essential for your business's continued success in a technology-driven world.
